Render a Triangle with OpenGL

This post discusses how to render a triangle with OpenGL. Rendering here assumes modern OpenGL, i.e., the old fixed-function pipeline is of no concern in this post, as we’ll be using OpenGL buffer objects and shaders.

A Simple Triangle

By following the tutorials in the previous posts (Setting up Eclipse CDT for OpenGL and the GLFW Example) we were able to create a minimal program displaying an empty window. Now we want to draw something with OpenGL in that window, specifically, a triangle. Why a triangle? Well, the triangle is the geometric shape most frequently used to approximate surfaces. Approximation of 3D surfaces in real-time graphics by means of simpler shapes is known as tessellation. For our tutorial purposes, a single triangle will suffice.

GPU Power

Modern GPUs are quite fast and can also have a considerable amount of dedicated memory. When rendering, we’d like for as much rendering data as possible to be read by the GPU directly from its local memory. In order to render a triangle with OpenGL we’ll need, obviously, to transfer the 3 vertices of the triangle to the GPU’s memory. However, we do NOT want our rendering to go like this:

- read a vertex from our computer RAM

- copy it to the GPU memory

- let the GPU process that single vertex

- and then repeat this whole process for the next vertex of the triangle.

Ideally, what we want is to transfer a batch of data to the GPU’s memory, copying all the triangle vertices at once, and then let the GPU operate on this data directly from its local memory. In OpenGL, a Vertex Buffer Object (VBO) represents such data placed in the GPU’s memory.

The data to render the triangle in OpenGL

Normally, we think of a vertex as a point, which in 3D space leads to a representation with 3 coordinates, commonly designated by x, y and z. However, in this case I’d like to think of a vertex as a more abstract concept: a minimal data structure required to define a shape. Given a vertex, we can “link” attributes to it to further define our shape. Thus, one such attribute of a vertex can be its position (the “x, y, z values”). Another attribute might be the vertex’s color. And so on. In this tutorial we will “link” two attributes to our vertices: position and color. For position we will have three coordinates, each a floating point value. If a float takes 4 bytes, then our position attribute requires 3 x 4 = 12 bytes. For the color attribute, we have 3 extra components, following the RGB model. Each color component is also a float taking 4 bytes, so the color attribute also requires 12 bytes. In total, each vertex takes 24 bytes: 12 for its position attribute and 12 for its color attribute.
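The byte counts above can be checked with a small C sketch. The struct name Vertex and the two helper functions are hypothetical names introduced only for this illustration, and the numbers assume a platform where a float takes 4 bytes, as the text does.

```c
#include <assert.h>
#include <stddef.h>  /* offsetof, size_t */

/* Hypothetical struct mirroring the layout described in the text:
   3 floats of position followed by 3 floats of color. */
typedef struct {
    float position[3];  /* x, y, z */
    float color[3];     /* r, g, b */
} Vertex;

/* Size in bytes of one whole vertex (both attributes together). */
size_t vertex_size(void)
{
    return sizeof(Vertex);
}

/* Byte offset of the color attribute from the start of a vertex. */
size_t color_offset(void)
{
    return offsetof(Vertex, color);
}
```

With 4-byte floats, vertex_size() gives the 24 bytes computed above, and color_offset() gives 12, i.e., the color starts right after the position.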

Now we have to specify how to process these vertices.

The Rendering Pipeline

OpenGL’s rendering pipeline basically consists of a series of stages which operate one after the other, like a cascade (a simplified description, but it will suffice for now). Each stage receives its input from the previous stage (the first stage receives your vertex data), and communicates its output to the next stage, until reaching the final goal: coloring pixels on screen.

What are Shaders?

Shaders are custom programs that will be executed by the GPU to transform your vertices, form geometric shapes, compute the color of pixels, and perform other operations. For the sake of simplicity, I’ll focus only on the two “core” shaders: the vertex shader and the fragment shader. A vertex shader receives a vertex (more specifically, the vertex’s attributes) and transforms them to yield some output. The GPU takes the data for all your vertices and runs several instances of the vertex shader in parallel, passing one vertex to each instance. It’s up to you to code in your vertex shader the functionality you require for your purposes (in this case, drawing a triangle).

A fragment shader is also a parallel program, but it operates on a fragment. A fragment, more or less, is a pixel (in detail, it’s all the data required to generate a pixel in the framebuffer.) A fragment shader will receive as input the custom output of previous shaders in the rendering pipeline, typically from the vertex shader. It will use these data to compute the color of a fragment. This means that the attributes we specified for our vertices (position and color) will be directly provided to the vertex shader but not to the fragment shader. If we want to access such data in the fragment shader we have to return them in custom outputs from the vertex shader, so they are passed down to all the other stages of the rendering pipeline, until reaching the fragment shader.

A shader in execution does not have access to the state of other running shaders (blindness property). Besides, a shader does not “remember” or store information from a previous execution (memoryless property).

The high-level shading language for OpenGL is GLSL (OpenGL Shading Language), which has an evident C vibe. A simple vertex shader for our purposes in GLSL is:

// GLSL Vertex Shader

#version 330 core

layout (location = 0) in vec3 position;

layout (location = 1) in vec3 color;

out vec3 vertexColor;

void main()

{

// gl_Position is a built-in GLSL output variable. The value

// we store here will be used by OpenGL to position the vertex.

gl_Position = vec4(position, 1.0);

// Just copy the vertex attribute color to our output variable

// vertexColor, so it's "propagated" down the pipeline until reaching

// the fragment shader.

vertexColor = color;

}

gl_Position is a standard OpenGL vertex shader output. The shader above is truly simple: it does not modify the position, it simply assigns it to gl_Position. Because gl_Position is a vector with 4 float components, we construct a new vec4 from position, which has 3 coordinates, and 1.0 for the 4th component of the vec4.

We use the output variable vertexColor to pass the attribute color down the rendering pipeline. That way, by having an input variable named vertexColor in our fragment shader, we will have access to this attribute in the fragment shader.

The layout keyword is very important. It assigns an index (a “location”) to a specific vertex attribute. In this case, we assigned location 0 to the vertex attribute position, and location 1 to the vertex attribute color. These indices will be required when we want to refer to those attributes from our C/C++ code.

A simple fragment shader would be:

// GLSL Fragment Shader

#version 330 core

out vec4 fragColor;

in vec3 vertexColor;

void main()

{

fragColor = vec4(vertexColor, 1.0f);

}

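The output variable fragColor receives the final color that must be written to the framebuffer. Its value comes from vertexColor, the color attribute that our vertex shader received and “propagated” down the pipeline. Because each vertex has a different color, OpenGL interpolates vertexColor across the triangle, so each fragment receives a blend of the three vertex colors.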
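To get a feeling for the value that arrives in vertexColor for a given fragment, here is a rough CPU-side sketch in C of a weighted blend of the three vertex colors. The type Color and the functions interpolate_color and triangle_color are hypothetical names used only for this illustration. The weights w0, w1 and w2 are barycentric weights that are assumed to add up to 1. Real GPUs use perspective-correct interpolation, which for a flat triangle like ours (w = 1.0 on every vertex) reduces to this plain linear blend.

```c
#include <assert.h>

/* Hypothetical RGB color type for this sketch. */
typedef struct {
    float r, g, b;
} Color;

/* Blend three vertex colors using weights that are assumed to sum to 1.
   Each weight says how "close" the fragment is to the matching vertex. */
Color interpolate_color(Color c0, Color c1, Color c2,
                        float w0, float w1, float w2)
{
    Color out;
    out.r = w0 * c0.r + w1 * c1.r + w2 * c2.r;
    out.g = w0 * c0.g + w1 * c1.g + w2 * c2.g;
    out.b = w0 * c0.b + w1 * c1.b + w2 * c2.b;
    return out;
}

/* Same blend, fixed to the red, green and blue vertices of this tutorial. */
Color triangle_color(float w0, float w1, float w2)
{
    const Color red   = { 1.0f, 0.0f, 0.0f };
    const Color green = { 0.0f, 1.0f, 0.0f };
    const Color blue  = { 0.0f, 0.0f, 1.0f };
    return interpolate_color(red, green, blue, w0, w1, w2);
}
```

A fragment sitting exactly on the first vertex (weights 1, 0, 0) is pure red, while a fragment halfway along the bottom edge (weights 0.5, 0.5, 0) gets half red and half green.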

Normalized Device Coordinates

OpenGL objects will be built and transformed in 3D space, but ultimately all of them must be projected on a plane: our screen. We define a space of Normalized Device Coordinates (NDC), which consists of the subspace bounded by planes x=-1, x=1, y=-1, y=1, z=-1, z=1. Shapes placed outside this space will be clipped and won’t appear on our screen. The center of this NDC space is (0,0,0). Let’s keep this in mind when defining the position of our triangle’s vertices. For instance, let’s use these position values for our vertices:

-0.5f, -0.5f, 0.0f, // x,y,z for bottom left vertex

0.5f, -0.5f, 0.0f, // x,y,z for bottom right vertex

0.0f, 0.5f, 0.0f // x,y,z for top vertex
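As a quick sanity check of the positions above, this C sketch tests whether points fall inside the NDC cube. The names inside_ndc, all_inside_ndc and triangle_positions are hypothetical and exist only for this example. It is a simplification: OpenGL actually clips shapes that are partially outside instead of simply accepting or rejecting whole vertices.

```c
#include <assert.h>
#include <stdbool.h>

/* Positions of the three vertices used in this tutorial, as x,y,z triples. */
static const float triangle_positions[] = {
    -0.5f, -0.5f, 0.0f,  /* bottom left  */
     0.5f, -0.5f, 0.0f,  /* bottom right */
     0.0f,  0.5f, 0.0f   /* top          */
};

/* True when the point lies inside, or on the boundary of, the cube
   bounded by x=-1, x=1, y=-1, y=1, z=-1, z=1. */
bool inside_ndc(float x, float y, float z)
{
    return x >= -1.0f && x <= 1.0f &&
           y >= -1.0f && y <= 1.0f &&
           z >= -1.0f && z <= 1.0f;
}

/* True when all `count` vertices, stored as consecutive x,y,z triples,
   are inside the NDC cube. */
bool all_inside_ndc(const float *positions, int count)
{
    for (int i = 0; i < count; ++i) {
        const float *p = positions + 3 * i;
        if (!inside_ndc(p[0], p[1], p[2])) {
            return false;
        }
    }
    return true;
}
```

Calling all_inside_ndc(triangle_positions, 3) returns true for our triangle, whereas a point such as (1.5, 0, 0) fails the check.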

Sending Vertices to the GPU

In order to render a triangle with OpenGL we now have to send the data to the GPU. Let’s first define our vertices with both attributes: position and color. We’ll place all of these data in an array:

float vertices[] = {

// For visualization: each row is a vertex.

// Each vertex has position [x, y, z] and color [r, g, b]

-0.5f, -0.5f, 0.0f, 1.0, 0.0, 0.0, // red color for this vertex

0.5f, -0.5f, 0.0f, 0.0, 1.0, 0.0, // green color

0.0f, 0.5f, 0.0f, 0.0, 0.0, 1.0 // blue color for our top vertex

};

The next step is allocating memory in the GPU to store the vertices’ data. We’ll use a Vertex Buffer Object (VBO) for that. By using these buffers we can send large batches of data all at once to the GPU. A VBO is an OpenGL object and we’ll get a unique ID to refer to it (a handle) by invoking glGenBuffers.

unsigned int vbo;

glGenBuffers(1, &vbo);

Now we have to bind this buffer. By binding we avoid having to pass the buffer ID to each OpenGL function that needs to operate on the buffer. Once we bind a buffer, the OpenGL functions will operate on the currently bound buffer. As the type of the VBO is GL_ARRAY_BUFFER, we bind the buffer with:

glBindBuffer(GL_ARRAY_BUFFER, vbo);

It’s time to send our vertices to the GPU:

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);
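The size passed to glBufferData is simply the size of the whole array in bytes. As a small sketch in plain C (no OpenGL calls), the helpers below show where that number comes from. FLOATS_PER_VERTEX, buffer_size_bytes and vertex_count are hypothetical names for this example, and the result assumes 4-byte floats.

```c
#include <assert.h>
#include <stddef.h>  /* size_t */

/* Each vertex has 3 position floats and 3 color floats. */
#define FLOATS_PER_VERTEX 6

static const float vertices[] = {
    -0.5f, -0.5f, 0.0f,  1.0f, 0.0f, 0.0f,  /* red   */
     0.5f, -0.5f, 0.0f,  0.0f, 1.0f, 0.0f,  /* green */
     0.0f,  0.5f, 0.0f,  0.0f, 0.0f, 1.0f   /* blue  */
};

/* Number of bytes glBufferData would be asked to copy. */
size_t buffer_size_bytes(void)
{
    return sizeof(vertices);
}

/* Number of vertices stored in the array. */
size_t vertex_count(void)
{
    return sizeof(vertices) / (FLOATS_PER_VERTEX * sizeof(float));
}
```

Here buffer_size_bytes() is 18 floats x 4 bytes = 72 bytes, and vertex_count() is 3. Note that sizeof(vertices) only yields the full size because vertices is a real array in scope. If the data were received through a float* parameter, sizeof would return the size of the pointer, and the byte count would have to be passed separately.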

Finally, define how OpenGL should interpret the vertices we’ve just loaded on GPU memory.

// Interpretation of the first attribute: position

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*) 0);

// Interpretation of the second attribute: color

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)(3 * sizeof(float)));

The first parameter of glVertexAttribPointer is the “location” of the vertex attribute we want to configure. This must match the layout (location = index) in the vertex shader (or you can use the OpenGL function glGetAttribLocation to retrieve the location). The second parameter is the size of the vertex attribute, i.e., its number of components. As our position attribute is a vec3, it’s composed of 3 values, so this parameter must be 3. The third parameter is the type of the data. The components of a GLSL vec3 are floats, so we pass GL_FLOAT. The next parameter is a boolean specifying whether OpenGL should normalize the data; our values are already floats, so we pass GL_FALSE.

The fifth parameter is the stride. This specifies the number of bytes between the start of this attribute for one vertex and the start of the same attribute for the next vertex. In our case, the position attribute for the next vertex comes after the six floats of the attributes of the current vertex (3 floats for position, 3 floats for color). Therefore, the stride is 6 * sizeof(float). If the values of an attribute are tightly packed, with no other data between them, setting this parameter to 0 tells OpenGL to determine the stride by itself.

The final parameter is the offset, the starting point of the attribute’s data in the buffer. As the first byte marks the start of our position attribute, we have no offset for position and pass (void*) 0. However, for color, we have an offset: we need to skip the data for the position attribute, i.e., we’ll have an offset of 3 * sizeof(float).

Remember that the instances of the vertex shader run in parallel, and each instance receives the attributes of one vertex. Advancing in the buffer by the stride takes us to the data of the next vertex.
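The stride and offset arithmetic can be emulated on the CPU. The sketch below is not how OpenGL is implemented; fetch_attribute and fetch_component are hypothetical helpers written only to show which bytes a given (stride, offset, vertex index) combination selects in our interleaved array.

```c
#include <assert.h>
#include <stddef.h>  /* size_t */
#include <string.h>  /* memcpy */

/* Same interleaved layout as in the tutorial: position, then color. */
static const float vertices[] = {
    -0.5f, -0.5f, 0.0f,  1.0f, 0.0f, 0.0f,
     0.5f, -0.5f, 0.0f,  0.0f, 1.0f, 0.0f,
     0.0f,  0.5f, 0.0f,  0.0f, 0.0f, 1.0f
};

#define STRIDE          (6 * sizeof(float))  /* bytes from one vertex to the next */
#define POSITION_OFFSET ((size_t)0)          /* position starts each vertex       */
#define COLOR_OFFSET    (3 * sizeof(float))  /* color follows the position        */

/* Copy the 3-float attribute of vertex `vertex_index` into `out`.
   The attribute starts at: offset + vertex_index * stride (in bytes). */
void fetch_attribute(const void *buffer, size_t stride, size_t offset,
                     size_t vertex_index, float out[3])
{
    const unsigned char *bytes = (const unsigned char *)buffer;
    memcpy(out, bytes + offset + vertex_index * stride, 3 * sizeof(float));
}

/* Convenience wrapper: one component (0, 1 or 2) of an attribute
   taken from the `vertices` array above. */
float fetch_component(size_t offset, size_t vertex_index, size_t component)
{
    float value[3];
    assert(component < 3);
    fetch_attribute(vertices, STRIDE, offset, vertex_index, value);
    return value[component];
}
```

For example, fetch_component(COLOR_OFFSET, 1, 1) skips one full vertex (the stride) plus the three position floats (the offset) and lands on the green component of the second vertex, which is 1.0.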

Vertex attributes are disabled by default. So we have to call glEnableVertexAttribArray(0) and glEnableVertexAttribArray(1) in order to enable the attributes with index 0 (position) and index 1 (color), respectively.

glBufferData and glVertexAttribPointer operate on the buffer currently bound to GL_ARRAY_BUFFER. That’s why we had to call glBindBuffer beforehand.

Vertex Array Objects

Technically we already have all the required information and configuration to draw the triangle. We’ve created a buffer, stored vertex data there, and also specified how to interpret those data. This procedure has to be carried out for each shape we want to draw, every time. For instance, to draw our triangle A we execute all the above calls. Then, to draw another shape B we also have to run these steps for the specific case of B. And if we want to draw A again we have to run all these steps once more. And so on. The process is cumbersome. OpenGL provides another concept for simplifying this: the Vertex Array Object (VAO). We proceed as follows: create a VAO, bind it, and then execute the VBO creation and the other steps we’ve described above. After that, when we want to draw some shape, we simply bind its VAO and then invoke the draw functions. That’s all. (Note that in the OpenGL core profile, which the full program at the end of this post requests, a VAO must be bound for the attribute setup and draw calls to work at all.)

unsigned vao;

glGenVertexArrays(1, &vao);

glBindVertexArray(vao);

After creating and binding the VAO, proceed with the steps for the VBO and the vertex attributes. Note that we need one VAO for each object we want to draw.

// To draw our triangle, just bind the VAO and then invoke glDrawArrays

glBindVertexArray(vao);

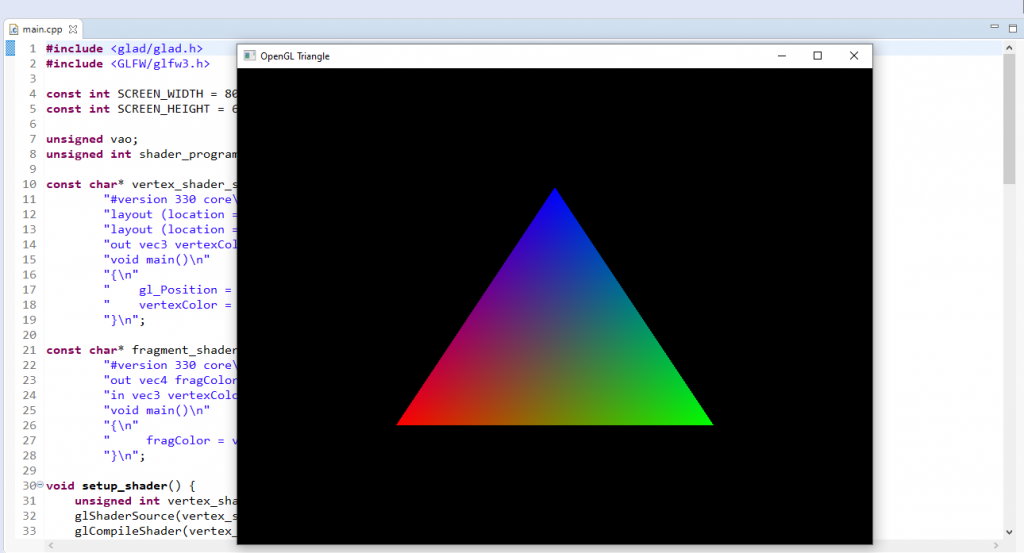

glDrawArrays(GL_TRIANGLES, 0, 3);Finally, render a triangle with OpenGL

This is the full code to render a triangle with OpenGL, using what we’ve discussed before:

#include <glad/glad.h>

#include <GLFW/glfw3.h>

const int SCREEN_WIDTH = 800;

const int SCREEN_HEIGHT = 600;

unsigned vao;

unsigned int shader_program;

const char* vertex_shader_source =

"#version 330 core\n"

"layout (location = 0) in vec3 position;\n"

"layout (location = 1) in vec3 color;\n"

"out vec3 vertexColor;\n"

"void main()\n"

"{\n"

" gl_Position = vec4(position, 1.0);\n"

" vertexColor = color;\n"

"}\n";

const char* fragment_shader_source =

"#version 330 core\n"

"out vec4 fragColor;\n"

"in vec3 vertexColor;\n"

"void main()\n"

"{\n"

" fragColor = vec4(vertexColor, 1.0f);\n"

"}\n";

void setup_shader()

{

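// Note: for brevity, compile and link errors are not checked here.

// Compile the vertex shader from its source string

unsigned int vertex_shader = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertex_shader, 1, &vertex_shader_source, NULL);

glCompileShader(vertex_shader);

// Compile the fragment shader from its source string

unsigned int fragment_shader = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragment_shader, 1, &fragment_shader_source, NULL);

glCompileShader(fragment_shader);

// Link both shaders into a single program object

shader_program = glCreateProgram();

glAttachShader(shader_program, vertex_shader);

glAttachShader(shader_program, fragment_shader);

glLinkProgram(shader_program);

// The shader objects are no longer needed once the program is linked

glDeleteShader(vertex_shader);

glDeleteShader(fragment_shader);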

}

void setup_data()

{

float vertices[] = {

// For visualization: each row is a vertex.

// Each vertex has position [x, y, z] and color [r, g, b]

-0.5f, -0.5f, 0.0f, 1.0, 0.0, 0.0, // red color for this vertex

0.5f, -0.5f, 0.0f, 0.0, 1.0, 0.0, // green color

0.0f, 0.5f, 0.0f, 0.0, 0.0, 1.0 // blue color for our top vertex

};

glGenVertexArrays(1, &vao);

glBindVertexArray(vao);

unsigned int vbo;

glGenBuffers(1, &vbo);

glBindBuffer(GL_ARRAY_BUFFER, vbo);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*) 0);

glEnableVertexAttribArray(0);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)(3 * sizeof(float)));

glEnableVertexAttribArray(1);

}

int main(void)

{

GLFWwindow* window;

if ( ! glfwInit() )

{

return -1;

}

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

window = glfwCreateWindow(SCREEN_WIDTH, SCREEN_HEIGHT, "OpenGL Triangle", NULL, NULL);

if ( ! window )

{

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

if ( ! gladLoadGLLoader((GLADloadproc)glfwGetProcAddress) )

{

glfwTerminate();

return -1;

}

setup_shader();

setup_data();

while ( ! glfwWindowShouldClose(window) )

{

glClear(GL_COLOR_BUFFER_BIT);

glUseProgram(shader_program);

glBindVertexArray(vao);

glDrawArrays(GL_TRIANGLES, 0, 3);

glfwSwapBuffers(window);

glfwPollEvents();

}

glfwTerminate();

return 0;

}

And this should be the output of this program:

Homework: Research Element Buffer Objects (EBO). Essentially, an EBO is an optimization mechanism to avoid passing redundant vertices to the GPU. You can include an EBO in a VAO, which is really nice.